Max Boonen is founder and CEO of crypto trading firm B2C2. This post is the first in a series of three that looks at high-frequency trading in the context of the evolution of crypto markets. Opinions expressed within are his own and do not reflect those of CoinDesk.

The following article originally appeared in Institutional Crypto by CoinDesk, a free weekly newsletter for institutional investors focused on crypto assets.

Matthew Trudeau, chief strategy officer of ErisX, offered a thoughtful response last month to a CoinDesk article about high-frequency trading in crypto. In short, CoinDesk reported that features linked to high-frequency trading in conventional markets were making their way onto crypto exchanges and that this might be bad news for retail investors.

While I agree with Trudeau that, in general, “automated market making and arbitrage strategies create greater efficiency in the market,” I disagree with his assertion that applying the conventional markets’ microstructure blueprint will improve liquidity in crypto.

I will explain below that, pushed to their limit, the benefits of speed brought about by electronification actually impair market liquidity as they morph into latency arbitrage. It is inevitable that crypto markets will become much faster, but there is a significant risk that some exchanges will overshoot and end up hurting their customer base, re-learning the lessons of the conventional latency wars a little too late. Exchanges that do will lose market share to electronic OTC liquidity providers and alternative microstructures, which I will present in this introductory post.

A brief history of the latency arms race

Starting in the mid-1990s, innovative firms such as GETCO revolutionised the US equity market by automating market making, traditionally the remit of humans on the floor of the New York Stock Exchange. These new entrants started by scraping information from the exchanges’ websites, before the APIs and trading protocols that we now take for granted existed.

Electronic trading firms quickly realised that faster participants would thrive. If new information originating on Chicago’s exchanges could be processed more rapidly, a trading firm could not only adjust its passive quotes there before everyone else but also trade against the stale orders of slower traders in New York who could not adjust their quotes in time, picking them off thanks to its speed advantage. This is known as latency arbitrage.
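To make the mechanism concrete, here is a minimal Python sketch of a single latency-arbitrage race. It assumes zero jitter, a single stale ask resting in New York, and a fair-value jump first observed in Chicago. The names `StaleQuote` and `latency_arb_pnl`, and all prices and latencies, are hypothetical and chosen only for illustration. Prices are kept in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass


@dataclass
class StaleQuote:
    ask_cents: int  # price the slow New York market maker is still offering
    size: int       # quantity resting at that price


def latency_arb_pnl(quote: StaleQuote, new_fair_cents: int,
                    arb_latency_us: float, maker_cancel_latency_us: float) -> int:
    """Fast trader's profit (in cents) after fair value jumps up elsewhere at t=0.

    The arbitrageur's buy order and the maker's cancel race to the New York
    venue. With zero jitter the smaller latency always wins (ties go to the maker).
    """
    edge = new_fair_cents - quote.ask_cents
    if edge <= 0:
        return 0  # the resting ask is not stale in the buyer's favour
    if arb_latency_us < maker_cancel_latency_us:
        return edge * quote.size  # exactly the maker's loss
    return 0  # the maker pulled the quote in time


quote = StaleQuote(ask_cents=10_000, size=10)
print(latency_arb_pnl(quote, 10_010, arb_latency_us=4_000, maker_cancel_latency_us=4_001))  # 100
print(latency_arb_pnl(quote, 10_010, arb_latency_us=4_001, maker_cancel_latency_us=4_000))  # 0
```

A 1 µs head start is enough to turn the maker's stale quote into the arbitrageur's profit, and a 1 µs deficit erases it entirely.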

Trudeau reproduces a great graph from a 2014 BlackRock paper, itself referencing a 2010 SEC review of market structure. At the time, it was becoming clear that passive market making, a socially useful (“constructive”) activity, and aggressive latency arbitrage, its by-product, were two sides of the same HFT coin.

This dynamic set off a frantic race to the bottom in latencies. HFT firms invested hundreds of millions of dollars, first in low-latency software, then in low-latency hardware (GPUs, then FPGAs) and low-latency communication networks such as dedicated “dark fibre” lines (Spread Networks, 2010) and radio-frequency towers (McKay Brothers, 2012). (Private networks already existed; the dates mark the arrival of commercially available ones.)
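The appeal of radio towers over fibre comes down largely to physics: light travels through glass fibre roughly a third slower than through air. The sketch below computes one-way propagation delay as distance × refractive index / c. The 1,200 km path and the refractive indices (about 1.47 for silica fibre, about 1.0003 for air) are illustrative assumptions. Real routes are longer than the straight-line distance and add equipment delays, and radio links also tend to follow straighter paths than buried fibre.

```python
C_KM_PER_MS = 299_792.458 / 1_000  # speed of light in vacuum: ~299.79 km per millisecond


def one_way_delay_ms(path_km: float, refractive_index: float) -> float:
    """Propagation delay only: signals travel at c / n through a medium of index n."""
    return path_km * refractive_index / C_KM_PER_MS


PATH_KM = 1_200  # hypothetical Chicago–New York path length, for illustration only
for medium, n in [("fibre", 1.47), ("radio", 1.0003)]:
    print(f"{medium}: {one_way_delay_ms(PATH_KM, n):.2f} ms")
# fibre: 5.88 ms
# radio: 4.00 ms
```

Even on identical path lengths, these assumptions give radio a saving of nearly 2 ms each way, a very large margin in a race measured in microseconds.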

Why is latency arbitrage harmful?

Prices are formed by the interaction of liquidity providers and liquidity consumers, or takers. Various types of takers operate on a spectrum from latency-insensitive long-term investors, with horizons of months or years, to the fastest high-frequency takers, who engage in latency arbitrage.

The business model of liquidity providers is to bridge the gap in time between buyers and sellers. Without market makers, investors could not transact efficiently, because buyers and sellers rarely wish to trade in opposite directions at exactly the same time. In fact, without an OTC market, how would they agree a price? Attempts to build investor-to-investor platforms in conventional markets have broadly failed.

In compensation for taking the risk that prices may move, market makers endeavour to capture a spread. The spread set by the makers is paid by the takers. It depends, inter alia, on volatility, volumes and, crucially, on the degree to which takers are, on average, informed about the short-run direction of the market (“toxicity”). Latency arbitrageurs are naturally informed about short-term direction, having witnessed price changes in another part of the market fractions of a second before others can.
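The link between toxicity and spread can be illustrated with a stylized adverse-selection model. This is a textbook simplification, not any firm's actual pricing.

Assume a fraction π of a maker's fills come from informed takers who already know the price will move by J against the maker, while the rest come from uninformed takers. Each uninformed fill earns the half-spread h. Each informed fill loses J − h. Breaking even therefore requires (1 − π)·h = π·(J − h), which gives h = π·J.

The function names and the 10 bps jump size below are hypothetical.

```python
def break_even_half_spread(toxicity: float, jump: float) -> float:
    """Half-spread h that sets a maker's expected P&L per fill to zero.

    toxicity: share of fills coming from informed takers (e.g. latency arbitrageurs).
    jump: price move, in the maker's quoting units, that informed takers know about.
    Uninformed fills earn h; informed fills lose (jump - h).
    (1 - toxicity) * h = toxicity * (jump - h)  =>  h = toxicity * jump
    """
    if not 0.0 <= toxicity <= 1.0:
        raise ValueError("toxicity must lie between 0 and 1")
    return toxicity * jump


def expected_pnl_per_fill(half_spread: float, toxicity: float, jump: float) -> float:
    """Average maker P&L per fill under the same two-type model."""
    return (1 - toxicity) * half_spread - toxicity * (jump - half_spread)


for tox in (0.05, 0.10, 0.20):
    h = break_even_half_spread(tox, jump=10.0)  # hypothetical 10 bps informed move
    print(f"toxicity {tox:.0%}: break-even half-spread {h:.1f} bps")
```

In this model, doubling the share of latency-arbitrage flow doubles the spread the maker must charge. Every taker pays that wider spread, not only the informed ones.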

Market makers concern themselves with what the fair clearing price would be and how much spread is required to compensate for a given amount of risk. They employ quantitative techniques to refine and automate this process. Latency arbitrageurs are primarily attentive to the relative direction of related markets on short time horizons, and invest in speed technology first and foremost.

Michael Lewis’ book, Flash Boys, famously paints a rather negative picture of the HFT industry and its impact on investors. I happen to disagree with Michael Lewis – but critics of HFTs have a point. While automation in market making has reduced spreads significantly for retail investors compared to the pre-internet era, it is the winner-takes-all nature of the latency arms race that is damaging to liquidity past a certain point.

The BlackRock chart mentioned earlier places arbitrage on a spectrum. It runs from constructive statistical arbitrage to structural strategies that include latency arbitrage and worse, such as intentionally clogging up exchange data feeds with millions of orders to make it difficult for slower participants to process market data in real time.

The problem with latency arbitrage is that it is now mostly a battle of financial clout. As exchange technology improved to keep up with electronification, the random delays in order processing time, called “jitter”, fell to virtually zero. This means that whoever reaches the next exchange first is guaranteed to come out ahead. At zero jitter, it is not enough for a liquidity provider to compete even at the level of the millisecond; even a 1-microsecond delay means that the latency arbitrageur’s gain will be the market maker’s loss. While anyone can be fast, only one person can be the fastest.
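The role of jitter can be shown with a small Monte Carlo sketch. It estimates how often the faster of two participants has its order processed first, given a fixed head start and random exchange-side processing delays. Drawing jitter uniformly from [0, jitter_ms] is a simplifying assumption. The function name and parameters are hypothetical.

```python
import random


def win_probability(advantage_ms: float, jitter_ms: float,
                    trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of how often the faster order is processed first.

    The fast participant's order arrives advantage_ms earlier. The exchange then
    adds an independent processing delay to each order, drawn uniformly from
    [0, jitter_ms] (an assumed jitter distribution).
    """
    rng = random.Random(seed)  # fixed seed for reproducible estimates
    wins = 0
    for _ in range(trials):
        fast_done = rng.uniform(0.0, jitter_ms)
        slow_done = advantage_ms + rng.uniform(0.0, jitter_ms)
        if fast_done < slow_done:
            wins += 1
    return wins / trials


for advantage, jitter in [(0.001, 0.0), (1.0, 5.0), (0.001, 5.0)]:
    print(f"head start {advantage} ms, jitter {jitter} ms: "
          f"fast side wins {win_probability(advantage, jitter):.2f}")
```

With zero jitter, a 1 µs head start wins every race. With 5 ms of uniform jitter, a 1 ms head start wins only about 68% of races, and a 1 µs head start is close to a coin flip. For a head start d no larger than the jitter J, the analytical probability is 1 − (J − d)²/(2J²). This is why the several milliseconds of jitter on web-based crypto exchanges blunt single-digit-millisecond advantages.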

“A lot of the tech I’ve been building in the past five years has been about saving half a microsecond, equivalent to 500 nanoseconds,” explains CMT’s CTO Robert Walker. “That edge can be the difference between making money or trading everyone else’s exhaust fumes. It’s a winner-takes-all scenario.”

Therefore, latency arbitrage is harmful because it leads to a natural monopoly that hurts competition. End users pay the price through two transmission mechanisms.

First, the latency race has made constructive passive strategies unprofitable at all but the highest frequencies. Market makers are forced to invest in technology to compete on speeds that are irrelevant to actual investors, rather than in research to improve pricing models. This creates a barrier to entry that lowers competition and increases concentration. Virtu’s latest annual report indicates that it spent $176 million on “communication and data processing,” 14% of its 2018 trading revenue and a growing proportion.

Second, liquidity providers quote wider spreads and reduce order sizes to recoup their expected losses to latency arbitrageurs. This amounts to an effective subsidy from end users to the fastest aggressive strategies.

Ironically, many high-frequency traders abhor the speed game. High-frequency trading firm XTX explained in a comment to the CFTC that “the race for speed in trading has reached an inflection point where the marginal cost of gaining an edge over other market participants, now measured in microseconds and nanoseconds, is harming liquidity consumers.” The latency problem is a prisoner’s dilemma that leads to over-investment. “We would both be better off not spending millions of dollars on latency, but if you do invest and I don’t, then I lose for sure.”
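The prisoner’s dilemma can be checked mechanically. The sketch below encodes a two-firm latency game in which each firm either invests in speed or refrains. It then finds the pure-strategy Nash equilibria by testing best responses. The payoffs are hypothetical annual profits in $m, chosen only to have the dilemma’s ordering (win the race > both refrain > both invest > lose the race). They are not drawn from any firm’s figures.

```python
from itertools import product

# Hypothetical annual profits ($m) for (my_choice, rival_choice); illustrative only.
PAYOFF = {
    ("refrain", "refrain"): 10,  # neither firm pays for the latency race
    ("invest", "refrain"): 12,   # I am fastest and pick off my rival
    ("refrain", "invest"): 0,    # "if you do invest and I don't, then I lose for sure"
    ("invest", "invest"): 6,     # the race is a wash and both pay for the hardware
}
CHOICES = ("refrain", "invest")


def best_response(rival: str) -> str:
    """My payoff-maximising choice given the rival's choice."""
    return max(CHOICES, key=lambda mine: PAYOFF[(mine, rival)])


def nash_equilibria() -> list[tuple[str, str]]:
    """Symmetric game: (a, b) is an equilibrium if each is a best response to the other."""
    return [(a, b) for a, b in product(CHOICES, repeat=2)
            if best_response(b) == a and best_response(a) == b]


print(nash_equilibria())  # [('invest', 'invest')]
```

Investing is the best response whatever the rival does, so both firms invest and earn 6 each. Had both refrained, each would have earned 10. This is the over-investment the quote describes.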

Latency arbitrageurs are sometimes market-making firms themselves that, having been forced to invest in speed, naturally start putting that expensive technology to more aggressive uses. Latency arbitrage is a behaviour; it does not map to a monolithic class of trading firms.

Where does crypto stand today?

Crypto trading is a web-based industry with broadly equal access. For now.

The ethos of crypto is that anyone can participate, big or small. In my opinion, the ability for anyone to devise a trading strategy, connect to an exchange and give it a go is up there in the industry’s psyche with the motto “Be your own bank.” However, just as happened with mining, trading professionally is rapidly becoming the preserve of the biggest firms.

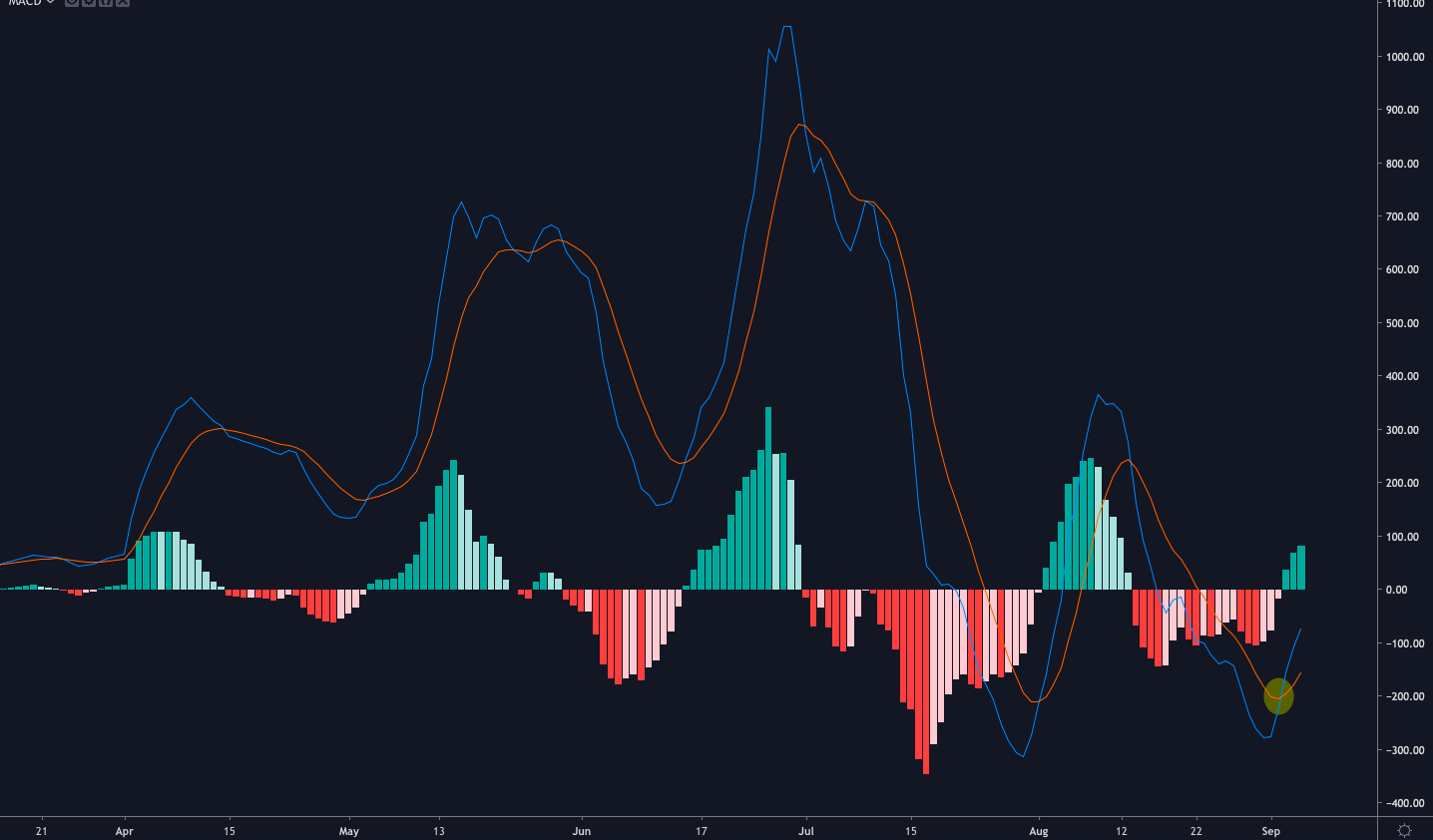

Today, most crypto exchanges are essentially websites. This is the only way to support many thousands of concurrent connections and maintain equal access. The nature of web technology means that “jitter” cannot be reduced much, because the web is parallel, not single-threaded. This acts as a natural barrier against latency arbitrageurs: a single-digit-millisecond latency advantage in getting from Binance to Bitstamp is worth less if the exchange’s internals add random jitter of several milliseconds. Below is a sample of latencies, in milliseconds, seen by B2C2 on a well-known crypto exchange over a period of 5 minutes:

Because it is not possible to run a low-latency, low-jitter exchange on web infrastructure, offering both implies that access must be tiered – with the result that only specialist firms such as B2C2 will benefit from the fastest, most expensive connectivity options.

Note that the main technical problem faced by crypto exchanges is handling concurrent connections at peak load, when crypto is on the move and thousands upon thousands of users suddenly connect simultaneously. The right comparison is Amazon’s website around Christmas, not the NYSE; the NYSE does not see a 10x increase in connected users when stocks are volatile. The main complaint that traders have against BitMEX, arguably the most successful crypto exchange, is not about latency but that the exchange rejects orders under heavy load.

The first exchange to offer a co-location service was OKCoin in 2014, although it is said that no one actually used the service. Newer exchanges hoping to attract institutional traders are more likely to offer co-location, or at least bells and whistles such as FIX connections; that is the case at Gemini, itBit and ErisX. Unsurprisingly, conventional venues such as the CME offer such services for their crypto products as a matter of course.

Today, several crypto exchanges are investing in speed technology in order to court new types of users. In the short run, perhaps over the next 12 months, latencies in crypto are likely to shrink significantly. To form an informed view of the longer term, though, we need to look at what is happening right now in conventional markets, which we will do in the next installment.


The post Crypto and the Latency Arms Race: Towards Speed Bumps and OTC Trading appeared first on Crypto Asset Home.