Abstract: In this piece, 100x Group grantee Calvin Kim announces the success in speeding up Bitcoin’s Initial Block Download (IBD) using the Utreexo client. While the speed improvement can vary depending on one’s local hardware and bottlenecks, the initial download and verification can be up to 62% faster compared to Bitcoin Core. Since many optimisations are yet to be implemented, the speedup is expected to increase. Calvin goes on to talk about how the IBD can be split up into multiple tasks, and therefore conducted by multiple computers. Eventually the plan is to implement Utreexo in C++ and get it merged into Bitcoin Core. While this may be a long way off, the C++ implementation has already been started.

I’m happy to announce that the Utreexo project is ready to share our improvements on increasing the speed of Bitcoin’s full Blockchain validation process, the Initial Block Download (IBD). We have finished implementing an alpha version of an Out of Order Block Validating Node based on utcd, our fork of btcd (a bitcoin node written in Go) and have benchmarked this node against BItcoin Core version 21.0.0.

In our testing, we were able to complete the IBD around 62% faster compared to Bitcoin Core version 21.0.0 (in default mode) provided that the user isn’t bandwidth limited. We expect this speed difference to increase as there are more optimizations available that we have not yet implemented. Our Out of Order Block Validating Node also allows for the IBD to be done with multiple computers, offering a unique advantage compared to the other Bitcoin node implementations available today.

A separate post will shortly be published, that explains how we’re able to do Out of Order Block Validation with Utreexo Accumulators.

Why does this speedup matter?

The IBD is the first step in joining the Bitcoin network as a rule enforcer. It is the process of downloading and validating all the blocks from the genesis block in 2009 to today’s block. The act of validating every single block is extremely important as this prevents bad actors from breaking the rules of consensus in the Bitcoin network which may include:

- Making coins out of thin air.

- Changing the maximum supply of Bitcoin.

- Stealing other’s coins.

It is possible to participate in the Bitcoin network by trusting third party nodes (ex: nodes run by exchanges, third party wallets, etc). However, this can hurt both the user and the Bitcoin network as a whole. The user can be hurt as the trusted third party may defraud the user of their funds. The Bitcoin Network as a whole can also be hurt because if there aren’t enough users running full nodes in the network to ensure rules are being followed, the rule breakers may be able to break the consensus rules in the Bitcoin network without detection. The role of participating in the Bitcoin network as a rule enforcer cannot be overstated, both for the user’s own safety and for the safety of the network as a whole. To join the Bitcoin network as a rule enforcer, the IBD is the first step that everyone must complete.

Because of this, it is greatly beneficial for the network and users if the IBD is minimized as an entry barrier for participating as a ruler enforcer. This means that one should be able to complete the IBD and become a rule enforcer as quickly and as easily as possible. Current testing and progress with the Utreexo accumulator indicates that the entry barrier for becoming a ruler enforcer will be significantly reduced.

Benchmarks

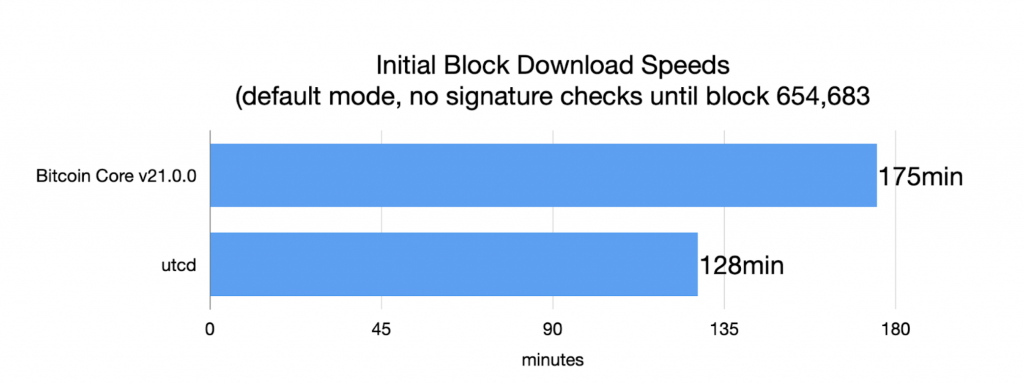

The benchmark was done with the data served from a local node with a gigabit connection.

| CPU | Ryzen 3600 |

| Memory | Samsung 32GB DDR4 2666MHz |

| Storage | 1TB HP SSD EX950 M.2 NVMe |

| Bitcoin Core version | v21.0.0 |

| Linux Kernel Version | 5.7.19 |

| bitcoin.conf settings | -dbcache=24000-maxmempool=1000 |

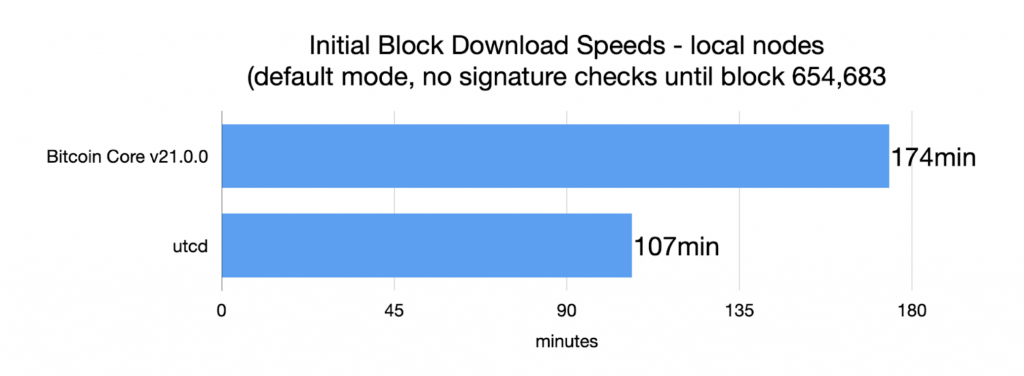

Over a gigabit connection, utcd was able to IBD roughly 36% faster than Bitcoin Core v21.0.0. We currently have not implemented Utreexo Proof caching in utcd and utcd is downloading roughly 900GB of data. Over a gigabit connection, this would take around 2 hours at minimum. We know from the original Utreexo paper that with Utreexo Proofs cached, the data required to download would be around 450~500GB. Below is the same IBD test but done with the serving node on the same machine, simulating what the performance would be like when we implement Utreexo Proof caching.

As you can see from the charts, the speed improvements increase to roughly 62% percent.

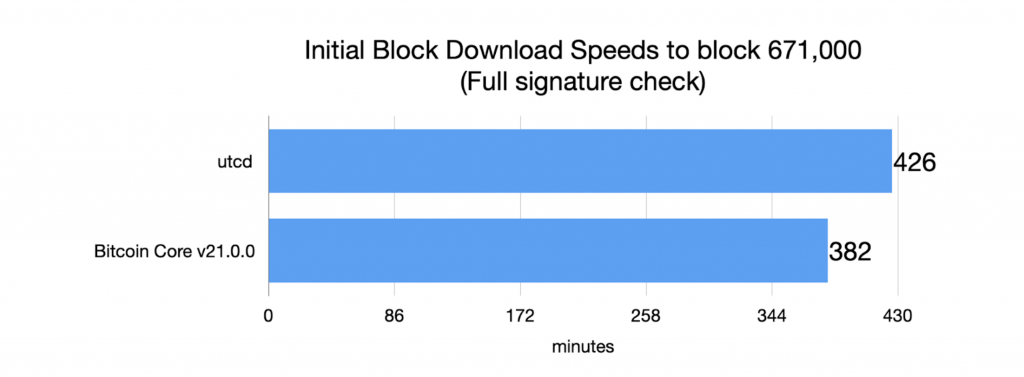

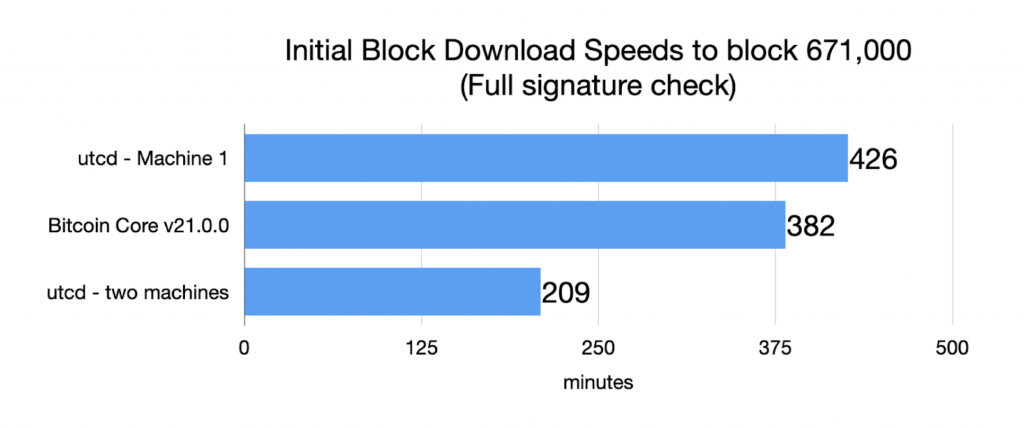

We also benchmarked both nodes with full signature validation.

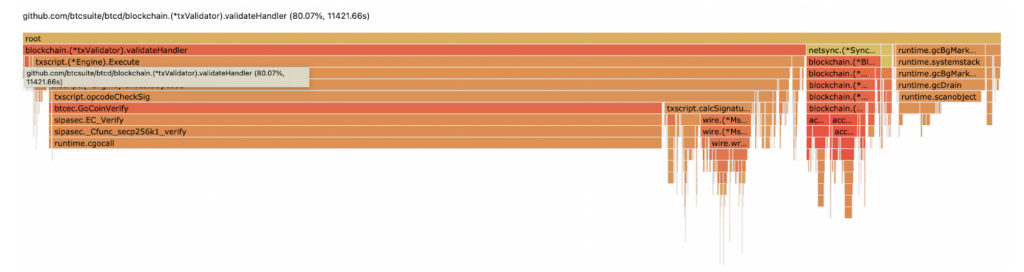

With full signature validation, We can see that utcd is around 15% slower. This is due to the signature checking being a complete bottleneck, taking up around 80% of the time. This can be seen from the flamegraph below.

With both utcd and Bitcoin Core calling libsecp256k1, the 15% increase in time taken makes sense as Go calling into C (cgo) has a non-negligible overhead.

While Utreexo Accumulators did not help IBD speeds when verifying all signatures in my testing, this won’t be the case in the future with Taproot. Taproot allows all the signatures in a block to be verified in batches (due to the nature of Schnorr Signatures), making signature verification much faster. This will cause the bottleneck to shift towards disk-access over time. Therefore, even though Utreexo Accumulators don’t help when all signatures are verified, we believe they will in the future.

Also, do note that Utreexo Accumulators will help if a user has a CPU with many cores and is thus bottlenecked by the disk access instead. A user running their Bitcoin node on a hard disk drive will also see speed increases as they’ll be bottlenecked by the disk as well.

Multi-machine Initial Block Download Benchmarks

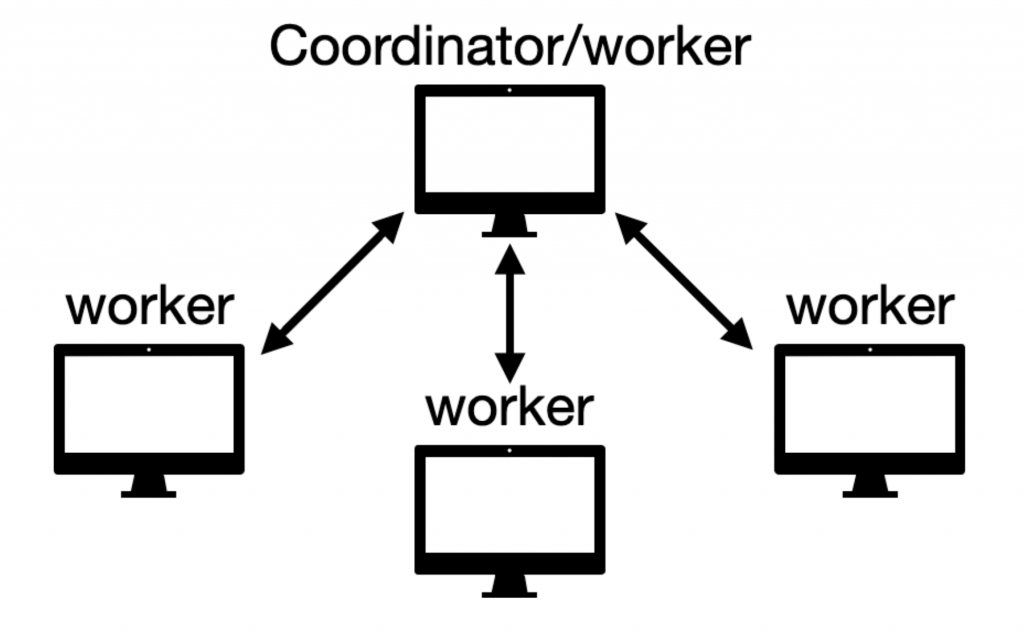

Splitting up work and using multiple compute resources (CPU, GPU, single CPU core, etc) to do the work simultaneously is called Parallel Computing. With Utreexo Accumulators, you can complete IBD with multiple computers. There are two key components to a multi-machine IBD: a coordinator and a worker.

The coordinator’s job is to keep track of what blocks have been verified. It assigns blocks that are not yet validated to a worker. The worker receives blocks it is to validate and returns whether those blocks are valid or not. This back and forth continues until the entire chain is validated.

There only needs to be a single coordinator and this coordinator can be assigned multiple workers.

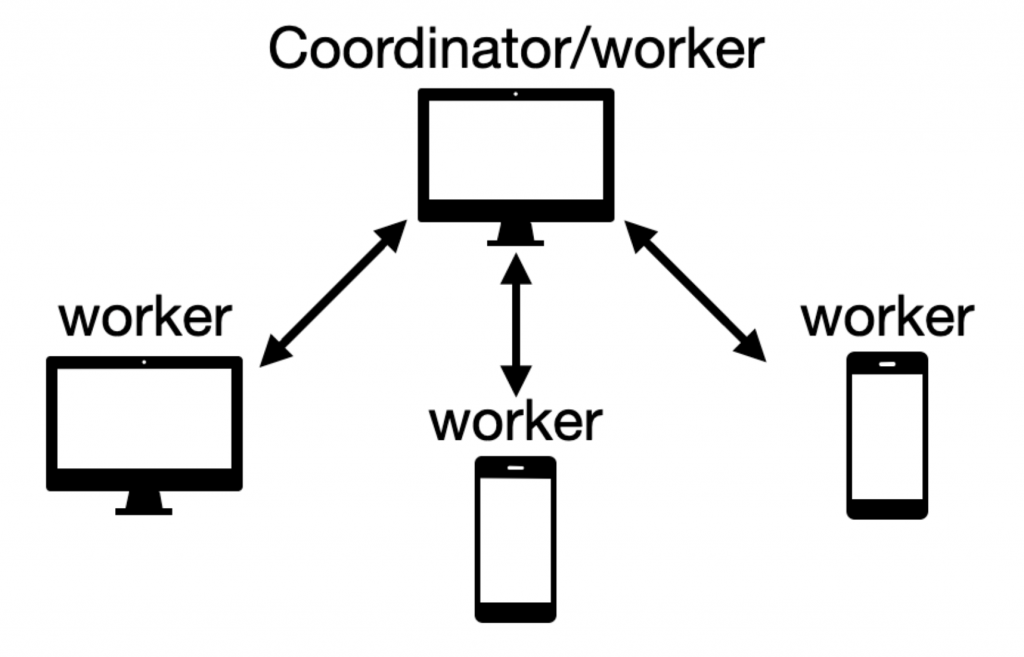

The coordinator machine is also a worker; it validates blocks as well. Three worker computers are connected to the coordinator and they validate blocks in tandem with the coordinator node. It’s important to note that the workers and the coordinators don’t have to be a desktop. They can be any computing device such as a smartphone.

A potential use case for multi-machine IBD could be when we’re not using our phones and laptops. A user can use their phone at night to help their Raspberry PI node sync up. A family also might use all their available computing devices in tandem to complete the IBD.

The below benchmarks are the result of two machines working in tandem to complete the IBD.

Machine 1

| CPU | Ryzen 3600 |

| Memory | Samsung 32GB DDR4 2666MHz |

| Storage | 1TB HP SSD EX950 M.2 NVMe |

Machine 2

| Macbook Pro M1 – 8GB Unified Memory (MacBookPro17,1) |

With two machines, we were able to complete the IBD with full signature checking faster than Bitcoin Core v21.0.0.

Adding more workers

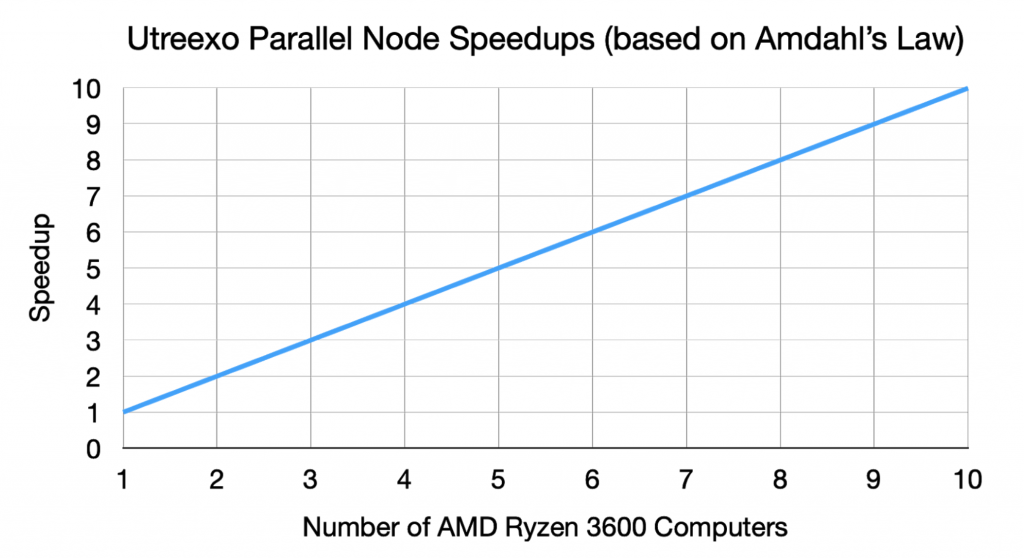

In multi-machine IBD, there must be a coordination of tasks between all the workers and the coordinator. This in turn adds more work to be done. The amount of time taken for this coordination is called Parallel Overhead. If the Parallel Overhead takes a long time, it puts a limit to how much you can parallelize a task.

The Parallel Overhead for Out of Order Block validation is the headers download. Over a gigabit connection, the headers download took 4.403 seconds. Since the entire time taken for the IBD is 426 minutes (for full signature checking), the percentage that can be parallelized is 99.982%. We can use this information to see what the expected maximum speedups are when using more computers with Amdahl’s Law.

Speedups are almost perfect (as in one more machine speeds it up by 1x) with a reasonable number of AMD Ryzen 3600 computers. Below linear graph shows this well.

The headers download, which is the only sequential part of the IBD with Utreexo Accumulators, is needed to provide the Median Time Past for transactions with Check Sequence Verify (among other things). The current implementation today has all the machines used in Multi-machine IBD do a separate headers download. We plan to put this data in the Utreexo Proofs to get rid of the headers download for all workers so that only the coordinator node has to do so. This will increase the speed benefits with even more computing resources.

Limitations of the Out of Order Block Validation

Since IBD with Utreexo accumulators requires extra data to be sent over (approximately 20%), this may increase the time taken for IBD for users whose internet speed is already a bottleneck. However, for users whose internet connection is not a bottleneck and have 20% more bandwidth to spare, they will benefit greatly from the Out of Order Initial Block Download. There is also progress made in the Utreexo Accumulator research that would greatly reduce how much extra data you need to download. More on this in the Future work section.

Future work

Smaller Utreexo Proofs

The biggest downside of using Utreexo Accumulators is that the Utreexo Proofs are not small. This both increases the size that an archival Utreexo node has to store and increases the bandwidth usage of Utreexo nodes. A recent paper by Bolton Bailey and Suryanarayana Sankagiri shows that we’re not as efficient as we could be in batching Utreexo Proofs. In their paper, they show that they were able to reduce the proof sizes by 4.6 times. This savings would help lessen the increase of storage and bandwidth usage.

Tadge Dryja has already drafted out a new Utreexo accumulator design based off of the findings of Bolton Bailey and Suryanarayana Sankagiri and we will be implementing this new design this year.

Clairvoyant caching

The existing caching algorithm explored in the Utreexo paper reduces the extra data downloaded to by around 20%. However, we can do better than this with a theoretically optimal caching algorithm, or a clairvoyant caching algorithm. This algorithm is usually impossible to implement since it requires the knowledge of future events. However, with IBD, peers we’re connected to know exactly what happens in the future, thus this algorithm can be implemented. This project is currently worked on by Claire Bao and Tadge Dryja.

C++ Implementation

The ultimate goal of the Utreexo project is to have the functionality of the Utreexo Accumulators included in Bitcoin Core. However, since the reference Utreexo Accumulator codebase is written in Go, an implementation in C++ has to be written. This project is called libutreexo and is led by Niklas Gögge. He’s also working on a fork of Bitcoin Core with libutreexo, exploring how the Utreexo Accumulators can fit into the codebase of Bitcoin Core.

Verifying my claims

The code that I used to run the benchmarks are available at github.com/mit-dci/utcd. Please refer to the readme in the repo to replicate the tests that I have done here.

Conclusion

The ability to validate the entire history of the Bitcoin Blockchain is extremely important as it strengthens both the user’s own security as well as the security of the Bitcoin Network as a whole. With Utreexo Accumulators, I believe we are able to make it easier for users to do so. While there is still much work to do before it is usable by an end user, we’ve come a long way since the inception. Please join us at github.com/mit-dci/utreexo if you’d like to join us in making the Blockchain validation faster.

Related

The post appeared first on Blog BitMex